Nursing Magnet Hospitals Have Better CMS Hospital Compare Ratings

Saturday, November 4, 2017 at 8:00AM

Richard A. Robbins, MD

Phoenix Pulmonary and Critical Care Research and Education Foundation

Gilbert, AZ USA

Abstract

Background: Data on whether Nursing Magnet Hospitals (NMH) provide better care have been conflicting.

Methods: NMH in the Southwest USA (Arizona, California, Colorado, Hawaii, Nevada, and New Mexico) were compared with hospitals not designated as NMH using the Centers for Medicare and Medicaid Services (CMS) Hospital Compare star ratings.

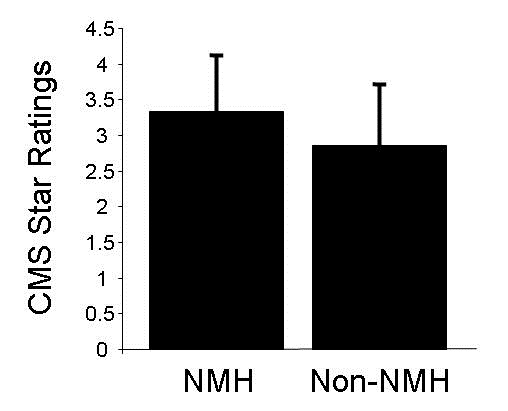

Results: NMH had higher star ratings than non-NMH (3.34 ± 0.78 vs. 2.86 ± 0.83, p<0.001). The NMH were mostly large, urban, non-critical access hospitals. Academic medical centers made up a disproportionately large portion of the NMH.

Conclusions: Although NMH had higher hospital ratings, the comparison may favor NMH because they were disproportionately non-critical access academic medical centers, which are known to have better outcomes.

Introduction

Magnet status is awarded by the American Nurses Credentialing Center (ANCC), a part of the American Nurses Association (ANA), to hospitals that meet a set of criteria designed to measure nursing quality. The Magnet designation program was based on a 1983 ANA survey of 163 hospitals and derived its key principles from the hospitals with the best nursing performance. Its primary aim was to help hospitals and healthcare facilities attract and retain top nursing talent.

There is no consensus on whether Magnet status affects nurse retention or clinical outcomes. Kelly et al. (1) found that NMH provide better work environments and have a more highly educated nursing workforce than non-NMH. In contrast, Trinkoff et al. (2) found no significant difference in working conditions between NMH and non-NMH. To further confuse the picture, Goode et al. (3) reported that NMH generally had poorer outcomes.

The Centers for Medicare and Medicaid Services (CMS) has developed star ratings in an attempt to measure quality of care (4). The ratings are based on five broad categories: 1. Outcomes; 2. Intermediate Outcomes; 3. Patient Experience; 4. Access; and 5. Process. Outcomes and intermediate outcomes are weighted three times as heavily as process measures, and patient experience and access measures are weighted 1.5 times as heavily as process measures. Ratings range from 1 to 5 stars, with more stars indicating higher quality.
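To illustrate how these relative weights shape a summary score, the sketch below combines five category scores into a weighted average using the 3:3:1.5:1.5:1 ratio given above. This is a hypothetical sketch, not the CMS algorithm. The category keys, the assumption that each category has already been scored on a 1 to 5 scale, and the use of a simple weighted mean were all chosen for illustration.

```python
# Hypothetical sketch: combine category scores (assumed to be on a 1-5 scale)
# into one summary score using the relative weights described in the text.
# This is NOT the published CMS methodology; it only illustrates the weights.

CATEGORY_WEIGHTS = {
    "outcomes": 3.0,
    "intermediate_outcomes": 3.0,
    "patient_experience": 1.5,
    "access": 1.5,
    "process": 1.0,
}


def weighted_star_score(category_scores: dict[str, float]) -> float:
    """Return the weighted mean of the supplied category scores."""
    weighted_sum = 0.0
    total_weight = 0.0
    for category, score in category_scores.items():
        weight = CATEGORY_WEIGHTS[category]  # raises KeyError for unknown categories
        weighted_sum += weight * score
        total_weight += weight
    if total_weight == 0:
        raise ValueError("no category scores supplied")
    return weighted_sum / total_weight


example = {
    "outcomes": 4,
    "intermediate_outcomes": 3,
    "patient_experience": 2,
    "access": 3,
    "process": 5,
}
print(round(weighted_star_score(example), 2))  # prints 3.35
```

When all five categories are present, a one-star change in Outcomes moves this summary by 0.3 stars (3/10 of the total weight). The same change in Process moves it by only 0.1 stars.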

This study compares CMS star ratings between NMH and non-NMH in the Southwest USA (Arizona, California, Colorado, Hawaii, Nevada, and New Mexico). The results demonstrate that NMH have higher CMS star ratings. However, NMH share characteristics that have previously been associated with higher quality of care on some measures.

Methods

Nursing Magnet Hospitals

NMH were identified from the American Nurses Credentialing Center list of Magnet® recognized hospitals (5).

CMS Star Ratings

Star ratings were obtained from the CMS website (4).

Statistics

Hospitals were included only when both Magnet status and a CMS star rating were available. Data are expressed as mean ± standard deviation. NMH and non-NMH were compared using Student’s t test. Significance was defined as p<0.05.
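The comparison described above can be approximately reproduced from the summary statistics reported in the Results: 44 NMH at 3.34 ± 0.78 and 415 non-NMH at 2.86 ± 0.83, treating "±" as standard deviation as stated above. The sketch below computes the pooled-variance (Student's) t statistic. For the two-sided p-value it uses a normal approximation to the t distribution, which is reasonable here only because the degrees of freedom are large. The function name is illustrative. With SciPy installed, `scipy.stats.ttest_ind_from_stats` would give the exact t-distribution p-value.

```python
import math


def pooled_t_test(mean1, sd1, n1, mean2, sd2, n2):
    """Student's two-sample t test (equal variances) from summary statistics.

    Returns (t, df, p_approx), where p_approx is a two-sided p-value from the
    normal approximation, adequate only when df is large.
    """
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its degrees of freedom
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    std_err = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (mean1 - mean2) / std_err
    # erfc(|t| / sqrt(2)) equals the two-sided tail area of a standard normal
    p_approx = math.erfc(abs(t) / math.sqrt(2))
    return t, df, p_approx


# NMH vs. non-NMH summary statistics as reported in the Results
t, df, p = pooled_t_test(3.34, 0.78, 44, 2.86, 0.83, 415)
print(f"t = {t:.2f}, df = {df}, p ~ {p:.4f}")
```

This gives t ≈ 3.67 with 457 degrees of freedom and a p-value well below 0.001, consistent with the reported result. The exact t-distribution p-value is slightly larger than the normal approximation but of the same order.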

Results

Hospital Characteristics

There were 44 NMH and 415 non-NMH in the data (see Appendix). California had the most hospitals (287) and the most NMH (28). Arizona had 8 NMH, Colorado 7, and Hawaii 1; Nevada and New Mexico had none. All NMH were acute care hospitals located in major metropolitan areas, and most were large hospitals. None was designated a critical access hospital by CMS. Eleven of the NMH were the primary teaching hospitals of medical schools, and many of the others had affiliated teaching programs.
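A few lines of arithmetic can check the counts above for internal consistency. The sketch below uses only figures reported in this section: the state-by-state NMH counts, the 287 California hospitals, and the 11 primary teaching hospitals. The variable names are illustrative.

```python
# Counts as reported in the Results; Nevada and New Mexico had no NMH.
nmh_by_state = {
    "California": 28,
    "Arizona": 8,
    "Colorado": 7,
    "Hawaii": 1,
    "Nevada": 0,
    "New Mexico": 0,
}
total_nmh = 44
total_non_nmh = 415
california_hospitals = 287
primary_teaching_nmh = 11

# The state-level NMH counts should add up to the overall NMH total
assert sum(nmh_by_state.values()) == total_nmh

all_hospitals = total_nmh + total_non_nmh
print(f"Hospitals analysed: {all_hospitals}")
print(f"NMH share of all hospitals: {total_nmh / all_hospitals:.1%}")
print(f"NMH share in California: {nmh_by_state['California'] / california_hospitals:.1%}")
print(f"Primary teaching hospitals among NMH: {primary_teaching_nmh / total_nmh:.0%}")
```

The state counts sum to 44. Of the 459 hospitals, about 9.6% are NMH, and a quarter of the NMH are primary teaching hospitals.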

CMS Star Ratings

The CMS star ratings were higher for NMH than for non-NMH (3.34 ± 0.78 vs. 2.86 ± 0.83, p<0.001, Figure 1).

Figure 1. CMS star ratings for Nursing Magnet Hospitals (NMH) and non-NMH (p<0.001).

Discussion

The present study shows that, among hospitals in the Southwest, NMH had higher CMS star ratings than non-NMH. This is consistent with a higher level of care in NMH. However, the NMH were large, urban, non-critical access hospitals and were disproportionately academic medical centers. Previous studies have shown that hospitals with these characteristics have better outcomes (6,7).

There is little consensus in the literature regarding patient outcomes in NMH. Studies published early in this decade examined nurse working conditions rather than patient outcomes and reached conflicting conclusions (1,2). A 2011 study concluded that non-NMH actually had better patient outcomes than NMH (3). In contrast, a more recent study found better patient outcomes in NMH (8). The present study supports the concept that NMH status might be a marker for better patient outcomes.

Achieving NMH status is expensive. Hospitals pay about $2 million for initial Magnet certification and nearly the same amount for re-certification every 4 years. It seems unlikely that small rural hospitals could afford to achieve and maintain Magnet status, regardless of their quality of care. Therefore, NMH would be expected to be larger, urban medical centers, which is what the present study found.

Although Magnet status is not directly linked to reimbursement, a study by the Robert Wood Johnson Foundation suggests that achieving Magnet status increases hospital revenue (9). On average, NMH received an adjusted net increase in inpatient income of about $104 to $127 per discharge after earning Magnet status, amounting to about $1.2 million in revenue each year. The reasons for this improvement in hospital finances are unclear.
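Simple arithmetic can relate the cost and revenue figures to each other. The sketch below is a back-of-envelope illustration, not an analysis from the cited study. It computes the annual discharge volume implied by $1.2 million at $104 to $127 per discharge. It also spreads the roughly $2 million certification cost over a 4-year re-certification cycle. That second step assumes re-certification costs the same as initial certification, whereas the text says only "nearly the same". The sketch also does not determine whether the revenue estimate already nets out certification costs.

```python
# Figures quoted in the text (US dollars); the annualized cost step assumes
# re-certification costs the same as initial certification.
revenue_per_discharge_low = 104
revenue_per_discharge_high = 127
annual_revenue_gain = 1_200_000
certification_cost = 2_000_000
recertification_cycle_years = 4

# Discharges per year implied by the per-discharge and annual figures
# (a higher per-discharge gain implies fewer discharges).
implied_discharges_low = annual_revenue_gain / revenue_per_discharge_high
implied_discharges_high = annual_revenue_gain / revenue_per_discharge_low

# Certification cost spread evenly over one re-certification cycle
annual_certification_cost = certification_cost / recertification_cycle_years

print(f"Implied discharges per year: {implied_discharges_low:,.0f} to {implied_discharges_high:,.0f}")
print(f"Certification cost per year of cycle: ${annual_certification_cost:,.0f}")
```

On these assumptions, the revenue figures imply roughly 9,400 to 11,500 discharges per year. The certification cost averages about $500,000 per year of the cycle.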

Measuring quality of care is complex. The CMS star ratings attempt to summarize quality of care using five broad categories: 1. Outcomes; 2. Intermediate Outcomes; 3. Patient Experience; 4. Access; and 5. Process. There are up to 32 measures in each category. Outcomes, patient experience, and access seem relatively straightforward. An example of an intermediate outcome is blood pressure control, which is included because of its link to outcomes. Examples of process measures include colorectal cancer screening, annual flu shots, and monitoring of physical activity. To further complicate the CMS ratings, each category is weighted differently.

It is possible that the CMS star ratings miss or underweight a key element of quality of care. For example, Needleman et al. (10) have emphasized that increased registered nurse (RN) staffing reduces hospital mortality. Yet a 2011 study concluded that NMH had lower total staffing and a lower RN skill mix than non-NMH, which contributed to poorer outcomes (3).

The present study supports the concept that achieving Magnet status is associated with better care as defined by CMS. However, given the complexity of measuring quality of care, it is unclear whether Magnet status is simply a marker of better hospitals or whether the process of achieving it leads to better care.

References

1. Kelly LA, McHugh MD, Aiken LH. Nurse outcomes in Magnet® and non-Magnet hospitals. J Nurs Adm. 2012 Oct;42(10 Suppl):S44-9.

2. Trinkoff AM, Johantgen M, Storr CL, Han K, Liang Y, Gurses AP, Hopkinson S. A comparison of working conditions among nurses in Magnet and non-Magnet hospitals. J Nurs Adm. 2010 Jul-Aug;40(7-8):309-15.

3. Goode CJ, Blegen MA, Park SH, Vaughn T, Spetz J. Comparison of patient outcomes in Magnet® and non-Magnet hospitals. J Nurs Adm. 2011 Dec;41(12):517-23.

4. Centers for Medicare and Medicaid Services. 2017 star ratings. Available at: https://www.cms.gov/Newsroom/MediaReleaseDatabase/Fact-sheets/2016-Fact-sheets-items/2016-10-12.html (accessed 10/15/17).

5. The American Nurses Credentialing Center. ANCC List of Magnet® Recognized Hospitals. Available at: http://www.clinicalmanagementconsultants.com/ancc-list-of-magnet-recognized-hospitals--cid-4457.html (accessed 10/15/17).

6. Burke LG, Frakt AB, Khullar D, Orav EJ, Jha AK. Association Between Teaching Status and Mortality in US Hospitals. JAMA. 2017 May 23;317(20):2105-13.

7. Joynt KE, Harris Y, Orav EJ, Jha AK. Quality of care and patient outcomes in critical access rural hospitals. JAMA. 2011 Jul 6;306(1):45-52.

8. Friese CR, Xia R, Ghaferi A, Birkmeyer JD, Banerjee M. Hospitals in 'Magnet' program show better patient outcomes on mortality measures compared to non-'Magnet' hospitals. Health Aff (Millwood). 2015 Jun;34(6):986-92.

9. Jayawardhana J, Welton JM, Lindrooth RC. Is there a business case for magnet hospitals? Estimates of the cost and revenue implications of becoming a magnet. Med Care. 2014 May;52(5):400-6.

10. Needleman J, Buerhaus P, Pankratz VS, Leibson CL, Stevens SR, Harris M. Nurse staffing and inpatient hospital mortality. N Engl J Med. 2011 Mar 17;364(11):1037-45.

Cite as: Robbins RA. Nursing magnet hospitals have better CMS hospital compare ratings. Southwest J Pulm Crit Care. 2017;15(5):209-13. doi: https://doi.org/10.13175/swjpcc128-17